While most computer-chip makers over the past decades have touted the advantages of an ever-shrinking product, a well-funded Silicon Valley company has built what it says is the largest and fastest computer chip ever made, dedicated to AI.

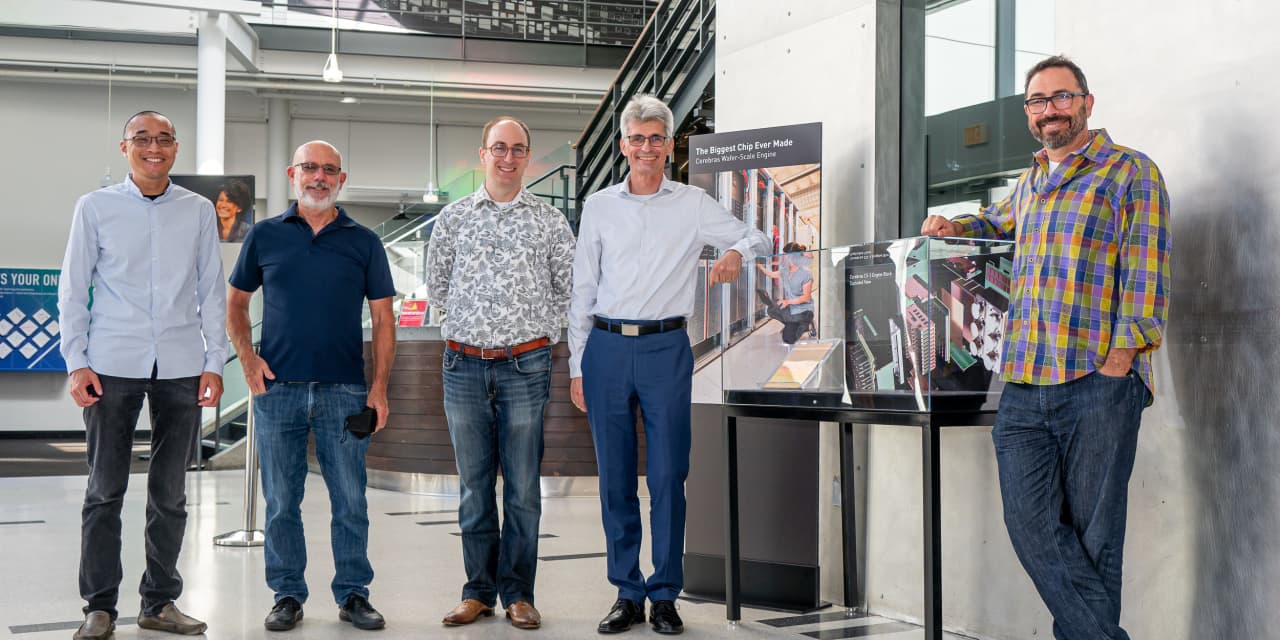

When the five friends who formed Cerebras Systems Inc. decided to start a company, they wanted to build a new computer to address a big problem. They had previously worked on compact, low-power servers for data centers at SeaMicro, later acquired by Advanced Micro Devices (AMD).

Cerebras was formally founded in 2016, six years before the debut of ChatGPT 3, but the founders decided back then to focus on the tough computing problem of AI, where it now competes with the industry leader, Nvidia Corp. (NVDA), as well as other chip giants and startups.

“We built the largest part ever made, the fastest ever made,” Cerebras co-founder and Chief Executive Andrew Feldman said. “We made a set of trade-offs. It is 100% for AI.”

After starting up in downtown Los Altos, Calif., Cerebras is now in Sunnyvale, Calif., just minutes away from data-center partner Colovore in nearby Santa Clara, Calif. It now has more than five times the office space, including the loading dock that a hardware company needs.

Cerebras has developed what it calls a wafer-scale engine with 2.6 trillion transistors and 850,000 cores, all on a silicon wafer about 8.5 inches wide. The wafers have been shipping since 2020 and are part of a complete system designed specifically to process queries and training for artificial intelligence. They are making inroads as an alternative to Nvidia in the high-performance computing market for AI systems.

“No one has built a chip this big in the history of compute,” Feldman told MarketWatch, as he held up the dinner-plate-sized wafer. “This replaces the CPU and the GPU. This is the compute engine. This replaces everything made by Nvidia and Intel and AMD.” Last year, Cerebras’ invention was inducted into Silicon Valley’s Computer History Museum as the largest computer chip in the world.

Cerebras is still private, but with $720 million in venture funding, it is one of the better-funded hardware and semiconductor startups. Several analysts believe it will be one of a handful of AI chip startups to succeed. In its most recent funding round, a Series F in 2021, the company said it had a valuation of $4 billion.

“They have come up with a very unique architecture,” said Jim McGregor, an analyst with Tirias Research. “Because of the way their system is architected, they can handle enormous amounts of data. It’s not the best solution for every application, because it is obviously not cheap. But it is an incredible solution for high-end data sets.” McGregor, quoting Nvidia CEO Jensen Huang, said there will be both multi-purpose data centers running AI and specialized AI factories. “I would put [Cerebras] in that second category of AI factory,” he said.

Cerebras’ systems are designed for dedicated AI workloads. Because AI is so processing-intensive, the system is built to keep all of the processing on the same giant chip. Feldman gave a simple analogy of watching a soccer game at home with the beer already on hand, compared with having to go to a store to buy some during the game. “All the communication is here, you are doing very small, short movements,” he said. Just as you don’t have to get into your car to buy more beer in the soccer game scenario, “you don’t have to go off-chip, you don’t have to wait for all the elements to be in place.”

Feldman declined to say what the company’s revenue is so far, but said it has doubled this year. This summer, Cerebras got a big boost with a major contract, valued initially at $100 million, to supply the first of nine AI supercomputers to G42, a tech conglomerate in the United Arab Emirates. The first of those systems is running live in the Colovore data center in Santa Clara, Calif., which offers a white-glove service for customers behind an unassuming office entrance on a back street lined with RV campers, a block from a Silicon Valley Power station. Proximity to a power station has now become an important feature for data centers.

“This is the cloud,” Feldman said, standing amid the loud, buzzing racks and water-cooled servers in a vast windowless room at Colovore. “It’s anything but serene.”

Sean Holzknecht, left, president of Colovore, and Andrew Feldman, CEO of Cerebras, in a Colovore data center in Santa Clara, Calif.

Therese Poletti

Before the recent deal with G42, Cerebras’ customer list was already an impressive collection of high-performance computing customers, including pharmaceutical companies GlaxoSmithKline (GSK), for making better predictions in drug discovery, and AstraZeneca (AZN), for running queries on hundreds of thousands of abstracts and research papers. National laboratories including Argonne National Labs, Lawrence Livermore National Laboratory, the Pittsburgh Supercomputing Center and several others are using the systems to accelerate research, simulate workloads and develop and test new research ideas.

“If they can keep their trajectory going, they could be one of the companies that survives,” said Pat Moorhead, founder and chief analyst at Moor Insights and Strategy. “Ninety out of 100 companies will go out of business. But for the sole fact that they are driving some pretty impressive revenue, they can establish a niche. They have an architectural advantage.”

As large corporations and small businesses alike rush to adopt AI to save on labor costs with (hopefully) better chatbots, conduct faster research or help with mundane tasks, many have been ramping up spending on their data centers to add the extra computing power that is needed. Nvidia has been one of the biggest beneficiaries of that trend, with its graphics processing units (GPUs) in huge demand. Analysts estimate Nvidia currently has between 80% and 90% of the market for AI-related chips.

“I like our odds,” said Eric Vishria, a general partner at Benchmark Capital, one of the earliest investors in Cerebras, when asked about Cerebras’ ability to survive and succeed in an environment where some AI chip startups are said to be struggling. “I am not an investor in any of the others. I have no idea how well they are doing, in terms of revenue and actual customer traction,” he said. “Cerebras is well ahead of the bunch as far as I understand it. It’s not easy, and one of the challenges has been that AI is moving and changing so fast.”

Feldman said that, of course, the next milestone for the company would be an initial public offering, but he declined to give any kind of timeframe.

“We have raised a huge amount of money and our investors need a return,” he said when asked if the company plans to go public. “We don’t see that as a goal but as a by-product of a successful company. You build a company on enduring technology that changes an industry. That is why you get up every morning and start companies, to build cool things to move the industry.”

Source website: www.marketwatch.com